Back in February I wrote about how GOV.UK helps people get from a central government website to services provided in their local area. For each of these services, from paying council tax to reporting a dangerous building, we try to direct the user to the most useful place on their local council website.

We do this using a postcode (which we geolocate with help from some open data and the open source MapIt service) and an open database of over 90 thousand URLs collated by councils and the Local Directgov team.

Unfortunately, with such a large database of URLs from such a large number of organisations (there are around 420 councils in the UK), there are inevitably going to be some that don’t work. This could be because a website has been rebuilt, because content has been moved around without redirects being put in place, or simply because of a mistake when the URL was added to the database.

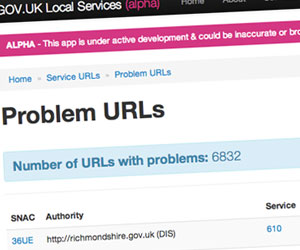

To help explore this huge dataset and identify problems before people come across a broken user journey, I’ve built a little tool.

This tool takes the Local Directgov URL dataset that we use on GOV.UK and runs various tests to try to work out if each URL works. Firstly, it checks the HTTP status code for the page – if this suggests it’s broken, the tool flags that URL as a problem to be looked at. Secondly, it checks the content of the page to see if it contains phrases like ‘Page not found’ or ‘Error 404’ – this helps identify problems when the website hasn’t been configured to send accurate HTTP status codes (which unfortunately is fairly common).
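To make the two checks concrete, here is a minimal sketch in Python. It isn’t the tool’s actual code: the function names, the phrase list beyond the two examples above, the timeout and the choice to treat any status of 400 or above as broken are all assumptions made for illustration.

```python
import urllib.error
import urllib.request

# Assumed phrase list: only these two examples are mentioned in the post.
ERROR_PHRASES = ("page not found", "error 404")


def classify(status, body):
    """Apply the two checks to an HTTP status code and a page body."""
    # Check 1: a 4xx or 5xx status code suggests the page is broken
    # (the threshold of 400 is an assumption of this sketch).
    if status >= 400:
        return "broken: HTTP %d" % status
    # Check 2: some sites return 200 for error pages, so look for
    # tell-tale phrases in the content (case-insensitive).
    lowered = body.lower()
    for phrase in ERROR_PHRASES:
        if phrase in lowered:
            return "suspect: page contains '%s'" % phrase
    return "ok"


def check_url(url, timeout=10):
    """Fetch a URL and classify it. Hypothetical helper, not the real tool."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            status = response.status
            body = response.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx responses.
        return classify(err.code, "")
    except (urllib.error.URLError, OSError) as err:
        # DNS failures, refused connections, timeouts and so on.
        return "broken: %s" % err
    return classify(status, body)
```

Keeping `classify` separate from the network call means the rules can be tried out on saved responses without hitting council websites. A simple phrase match like this will also produce false positives (a page that explains what ‘Error 404’ means, for example), so anything it flags is a candidate for a human to look at rather than a confirmed broken link.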

At the moment, there appear to be approximately 6,600 broken URLs in the dataset (around 9% of those being checked).

The tool is a little rough around the edges, but it gives a good overview of some of the current issues in the dataset. I’m going to be adding further tests, reports and other features to make it more useful over the next couple of weeks.

While it’s still a work in progress, it’s using live data and is re-checking existing URLs and importing changes to the datasets on a regular basis to provide as accurate a picture as possible.

There are a couple of important limitations and caveats:

- It’s currently only checking the services that exist on GOV.UK – which account for something like 73 thousand URLs. The Local Directgov dataset contains another 20 thousand or so that aren’t being checked. You can see the services it’s checking here.

- It’s running on a rather underpowered server, so things might take some time to load.

- I’m still adding further checks and reports (including CSV/JSON views) so I’m likely to break things fairly regularly – sorry!

Feel free to take a look and let me know what you think.